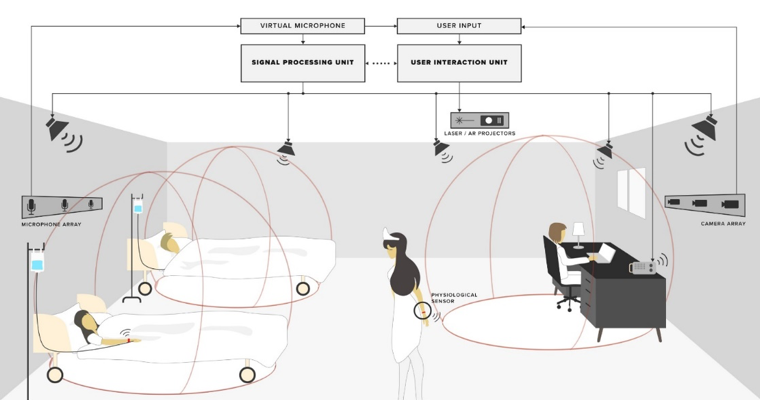

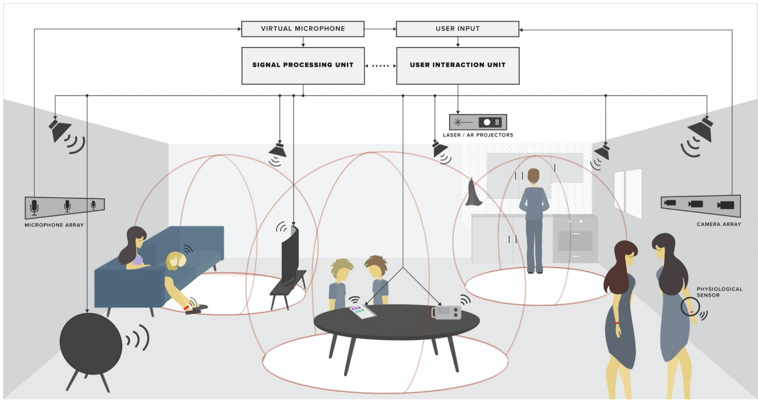

Fig 1.

The system consists of multiple speakers and microphones, a signal processing unit, sensors for user input, and units for visual output. It is customizable and scalable, and can be tailored to factors such as room size, the number of possible zones, the level of sound separation, sound quality, and user control and feedback.

To create a sound zone from an array of speakers or soundbars, the audio sent to the speakers needs to be processed so that particular acoustic effects are achieved in different locations of the room.
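The level of sound separation between zones is commonly quantified in the sound-zone literature as acoustic contrast: the ratio, in dB, of the mean squared sound pressure in the zone where the audio should be heard (the bright zone) to that in the zone where it should be suppressed (the dark zone). The following is a minimal Python sketch, assuming single-frequency complex pressures sampled at a few control points per zone. The function name `acoustic_contrast_db` and the example values are illustrative and are not taken from ISOBEL.

```python
import math


def acoustic_contrast_db(bright, dark):
    """Acoustic contrast in dB between a bright zone and a dark zone.

    `bright` and `dark` are lists of complex sound pressures (phasors at a
    single frequency) sampled at control points inside each zone.
    """
    # Mean squared pressure magnitude (spatially averaged energy) per zone
    energy_bright = sum(abs(p) ** 2 for p in bright) / len(bright)
    energy_dark = sum(abs(p) ** 2 for p in dark) / len(dark)
    return 10.0 * math.log10(energy_bright / energy_dark)


# Illustrative values: |p| = 1.0 in the bright zone, |p| = 0.1 in the dark zone
bright_zone = [1.0 + 0.0j, 0.8 + 0.6j]
dark_zone = [0.1 + 0.0j, 0.0 + 0.1j]
print(round(acoustic_contrast_db(bright_zone, dark_zone), 1))  # 20.0
```

A tenfold pressure ratio between the zones corresponds to 20 dB of contrast; identical zones give 0 dB.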

The ISOBEL approach is to introduce an adaptive signal processing loop in which the acoustic effect actually created is measured and fed back to the sound processing algorithm, which then adjusts accordingly. In this way, the sound zone can be optimized in real time, e.g. to follow a listener or to determine whether an intended aim has been achieved.
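The adaptive loop can be illustrated with a minimal gradient-descent (LMS-style) sketch at a single frequency. It assumes, hypothetically, that the speaker-to-microphone transfer functions `G` are known exactly and that the microphones sit at the points where the intended effect is specified; a practical system would work with estimated, time-varying transfer functions and time-domain filters. `measure` stands in for the real acoustic measurement, and all names are illustrative rather than ISOBEL's implementation.

```python
def measure(G, q):
    """Stand-in for the acoustic measurement: complex pressure at each
    microphone for complex speaker weights q (single frequency)."""
    return [sum(g * qk for g, qk in zip(row, q)) for row in G]


def adapt_weights(G, target, mu=0.5, iterations=200):
    """Steepest-descent (LMS-style) loop minimising |target - G q|^2."""
    n_mics, n_speakers = len(G), len(G[0])
    q = [0j] * n_speakers
    for _ in range(iterations):
        p = measure(G, q)                          # measured acoustic effect
        e = [d - pm for d, pm in zip(target, p)]   # deviation from intended effect
        # Update q <- q + mu * G^H e (G^H is the conjugate transpose of G)
        for k in range(n_speakers):
            q[k] += mu * sum(G[m][k].conjugate() * e[m] for m in range(n_mics))
    return q


# Two speakers, two mics: full level at mic 0 (bright), silence at mic 1 (dark)
G = [[1.0 + 0j, 0.5 + 0j],
     [0.4 + 0j, 1.0 + 0j]]
target = [1.0 + 0j, 0j]
weights = adapt_weights(G, target)
print([round(abs(p), 3) for p in measure(G, weights)])  # [1.0, 0.0]
```

Each iteration measures the pressure, compares it with the intended effect, and nudges the speaker weights along the negative gradient of the squared error. With the step size `mu` below 2 / λ_max(G^H G), the weights converge to values that reproduce the target; if the room changes, rerunning the loop re-adapts them.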

As the approach requires data on how the sound zone is performing at the listener's position, “virtual microphones” will be developed. These estimate the transfer function from the speakers to the listener and extrapolate the sound pressure at the listener's position from an arbitrarily placed microphone array.
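The extrapolation step behind a virtual microphone can be sketched in the simplest possible case: one source at one frequency, with known transfer functions to the physical microphones and to the listener's position. Under that simplifying assumption, the source strength is estimated by least squares from the physical microphone signals and then propagated to the virtual position. This is a hypothetical illustration (the general multi-source case replaces the scalar division with a matrix pseudo-inverse, as in the remote microphone technique), not the method developed in ISOBEL; the function name and values are made up.

```python
def estimate_virtual_pressure(p_mics, h_mics, h_virtual):
    """Least-squares estimate of the pressure at a virtual microphone.

    Simplifying assumption: a single source at one frequency, so physical
    mic i measures p_i = h_i * s and the virtual position would see h_virtual * s.
    """
    # Least-squares source strength: s_hat = sum(conj(h_i) * p_i) / sum(|h_i|^2)
    numerator = sum(h.conjugate() * p for h, p in zip(h_mics, p_mics))
    denominator = sum(abs(h) ** 2 for h in h_mics)
    s_hat = numerator / denominator
    # Propagate the estimated source strength to the listener's position
    return h_virtual * s_hat


# Illustrative, noise-free example with made-up transfer functions
h_mics = [0.5 + 0.5j, 0.8 - 0.2j, 0.3 + 0.1j]   # source -> physical mics
h_virtual = 0.6 + 0.3j                          # source -> listener position
source = 2.0 - 1.0j
p_mics = [h * source for h in h_mics]           # what the array would measure
print(estimate_virtual_pressure(p_mics, h_mics, h_virtual))  # close to 1.5+0j
```

With noisy measurements, using more physical microphones than sources lets the least-squares estimate average out some of the noise.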

Dynamic sound zones rely on a large number of speakers. These need to be wireless to ease installation and allow portable units. However, as precise control of the signal at each speaker is required, and wireless network transmission of audio is highly error-prone, new network and audio signal coding algorithms need to be developed.

Network coding will be optimized by modelling common transmission loss artefacts and gauging their effect on the sound zone. Audio coding will be made adaptive to the state of the network, e.g. sacrificing control for robustness. Network and audio coding will be combined to achieve adaptivity and low delay, and to account for changes in the environment such as room conditions, network topologies, and moving audio signals and listeners.
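The trade-off between control and robustness can be made concrete with a deliberately simple, hypothetical policy: send each audio frame in several copies and pick the smallest number of copies that keeps the probability of losing a frame entirely below a target. The sketch assumes packet losses are independent with a known rate, which real wireless links (where losses tend to come in bursts) do not guarantee. The function `choose_redundancy` and its parameters are illustrative and are not ISOBEL's coding scheme.

```python
def choose_redundancy(loss_rate, target_residual, max_copies=4):
    """Smallest number of copies per audio frame such that the probability of
    losing every copy is at most target_residual, assuming independent losses.

    Returns (copies, residual_loss); caps at max_copies if the target is
    unreachable within that budget.
    """
    if not 0.0 <= loss_rate < 1.0:
        raise ValueError("loss_rate must be in [0, 1)")
    for copies in range(1, max_copies + 1):
        residual = loss_rate ** copies   # all copies lost independently
        if residual <= target_residual:
            return copies, residual
    return max_copies, loss_rate ** max_copies


copies, residual = choose_redundancy(0.05, 0.001)
print(copies)  # 3
```

For example, at 5% packet loss and a target residual loss of 0.1%, three copies are needed (0.05³ = 0.000125), tripling the bandwidth spent on that stream; bandwidth that could otherwise carry more precise control data.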

Based on our research, and following the integration of software into several B&O speaker products, user interaction with dynamic sound zones in ISOBEL will draw on input and output techniques from the natural user interface (NUI) paradigm. The sound zone system will be interactive through background sensing and foreground control.

Important inputs are the location and emotional state of the listener. These will be detected with a range of sensors and used to develop a closed-loop interaction technique in which sound zones optimize themselves (audio properties and content) to achieve an intended effect.

A significant challenge of dynamic sound zones is that they are invisible and intangible and, therefore, difficult to control. Building on the demonstrated potential of NUIs, the ISOBEL approach is to make sound zones visible using AR (e.g. projection, LED, laser), and controllable through tangibles and speech. For this, techniques will be developed to control boundary conditions of the self-regulating sound zone system (e.g. desired effect and limits of operation) with an optimal user experience.

To support a short time to market for commercial sound zone products, the project will implement sensing and interaction using existing consumer-grade technology, such as smartwatches, motion detection cameras, miniature projectors, and voice-based digital assistants.

However, extending ongoing work in HCI, user interaction research will also involve cutting-edge experimental input and output technologies that are somewhat further from being available in commercial products. These include shape-changing physical devices that combine input and output in one medium, next-generation physiological sensors for more accurate measurement of users’ emotional state, and proactive digital assistants that nudge the user towards a desired behaviour.

Quality of Experience (QoE)

Development of the sound zone system relies on continual evidence of its performance. Listener Quality of Experience (QoE) is vital to ensure that intended effects can be achieved. Understanding the relationship between QoE and sound zone properties is therefore of great importance and requires experiments on audio perception. These are carried out through sensory analysis in the new, unique SDM labs at AAU-SIP and B&O (Fig 2) and in our newly built sound zone prototype setup (Fig 3).

Fig 2. SDM Lab for Auralizing Sound Fields

The newly built SDM laboratory setup at B&O, Struer, one of only three setups in the world dedicated to auralizing sound fields using the Spatial Decomposition Method (SDM).

Fig 3. Sound Zone Test Setup

The newly built sound zone prototype setup at B&O, Struer. A similar prototype setup will be established at AAU.

Tested in real life

For validation of real-world performance and usability, three main iterations of prototypes will be deployed long-term in health care settings and home contexts.

Healthcare deployments will be carried out through established relationships with North Zealand Hospital and Aalborg University Hospital, which have agreed to let ISOBEL use delivery rooms and intensive care units as test facilities. These deployments will compare the effects of our sound zone prototype in different configurations against standard rooms, similar to the study of Wavecare’s sensory delivery rooms reported in Nature [28].

Data will be collected by logging patients’ well-being and analysing health records, combined with staff interviews. Where possible, qualitative feedback will also be gathered from patients.

Home deployments will be carried out with 15 households recruited through B&O’s user research department. These households will have experimental sound zone prototypes installed in their homes, integrated with their existing sound system setups. Users will then be free to use and experience the interactive sound zone system as part of their everyday lives. Data will be collected through logs of system use, physiological measurements, and interviews and observations at intervals of 1 to 1½ months.

This will be combined with participatory design sessions where the families are encouraged to provide ideas on how they would like to interact with and use the system.